publications

* denotes equal contribution.

2025

-

Online Slip Detection and Friction Coefficient Estimation for Autonomous Racing
Chris Oeltjen*, Carson Sobolewski*, Saleh Faghfoorian*, Lorant Domokos, Giancarlo Vidal, and Ivan Ruchkin
Under Review, 2025

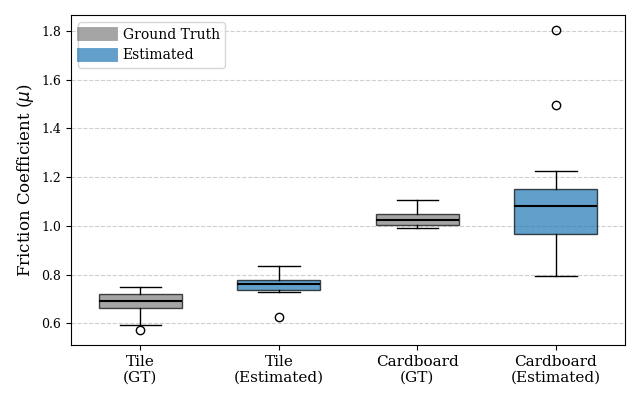

Accurate knowledge of the tire-road friction coefficient (TRFC) is essential for vehicle safety, stability, and performance, especially in autonomous racing, where vehicles often operate at the friction limit. However, TRFC cannot be measured directly with standard sensors, and existing estimation methods either depend on vehicle or tire models with uncertain parameters or require large training datasets. In this paper, we present a lightweight approach for online slip detection and TRFC estimation. Our approach relies solely on IMU and LiDAR measurements and the control actions, without specialized dynamical or tire models, parameter identification, or training data. Slip events are detected in real time by comparing commanded and measured motions, and the TRFC is then estimated directly from accelerations observed under no-slip conditions. Experiments with a 1:10-scale autonomous racing car across different friction levels demonstrate that the proposed approach achieves accurate and consistent slip detection and friction coefficient estimates that closely match ground-truth measurements. These findings highlight the potential of our simple, deployable, and computationally efficient approach for real-time slip monitoring and friction coefficient estimation in autonomous driving.
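To illustrate the two steps in this abstract, the sketch below flags slip by comparing a commanded yaw rate with the measured (IMU) yaw rate. It then bounds the friction coefficient using the friction circle, mu >= sqrt(ax^2 + ay^2) / g, over no-slip samples only. This is a minimal sketch under stated assumptions, not the paper's implementation. The yaw-rate residual, the 0.3 rad/s threshold, and the names `detect_slip` and `estimate_friction` are hypothetical. The LiDAR-based motion measurement used in the paper is omitted.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def detect_slip(commanded_yaw_rate, measured_yaw_rate, threshold=0.3):
    """Hypothetical slip test: flag slip when the measured yaw rate deviates
    from the commanded yaw rate by more than `threshold` rad/s."""
    return abs(measured_yaw_rate - commanded_yaw_rate) > threshold

def estimate_friction(samples, threshold=0.3):
    """Lower-bound the friction coefficient from no-slip samples.

    Each sample is (commanded_yaw_rate, measured_yaw_rate, ax, ay), with the
    planar accelerations ax, ay in m/s^2. While the tires grip, friction
    supplies the whole planar acceleration, so mu >= sqrt(ax^2 + ay^2) / g.
    """
    mu = 0.0
    for cmd, meas, ax, ay in samples:
        if detect_slip(cmd, meas, threshold):
            continue  # skip samples where the tires are sliding
        mu = max(mu, math.hypot(ax, ay) / G)
    return mu
```

The estimate is the largest acceleration observed without slip. It therefore approaches the true coefficient only when the car drives near the grip limit. Gentler driving yields a conservative lower bound.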

-

Quantifying the Reliability of Predictions in Detection Transformers: Object-Level Calibration and Image-Level Uncertainty
Young-Jin Park*, Carson Sobolewski*, and Navid Azizan
Under Review, 2025

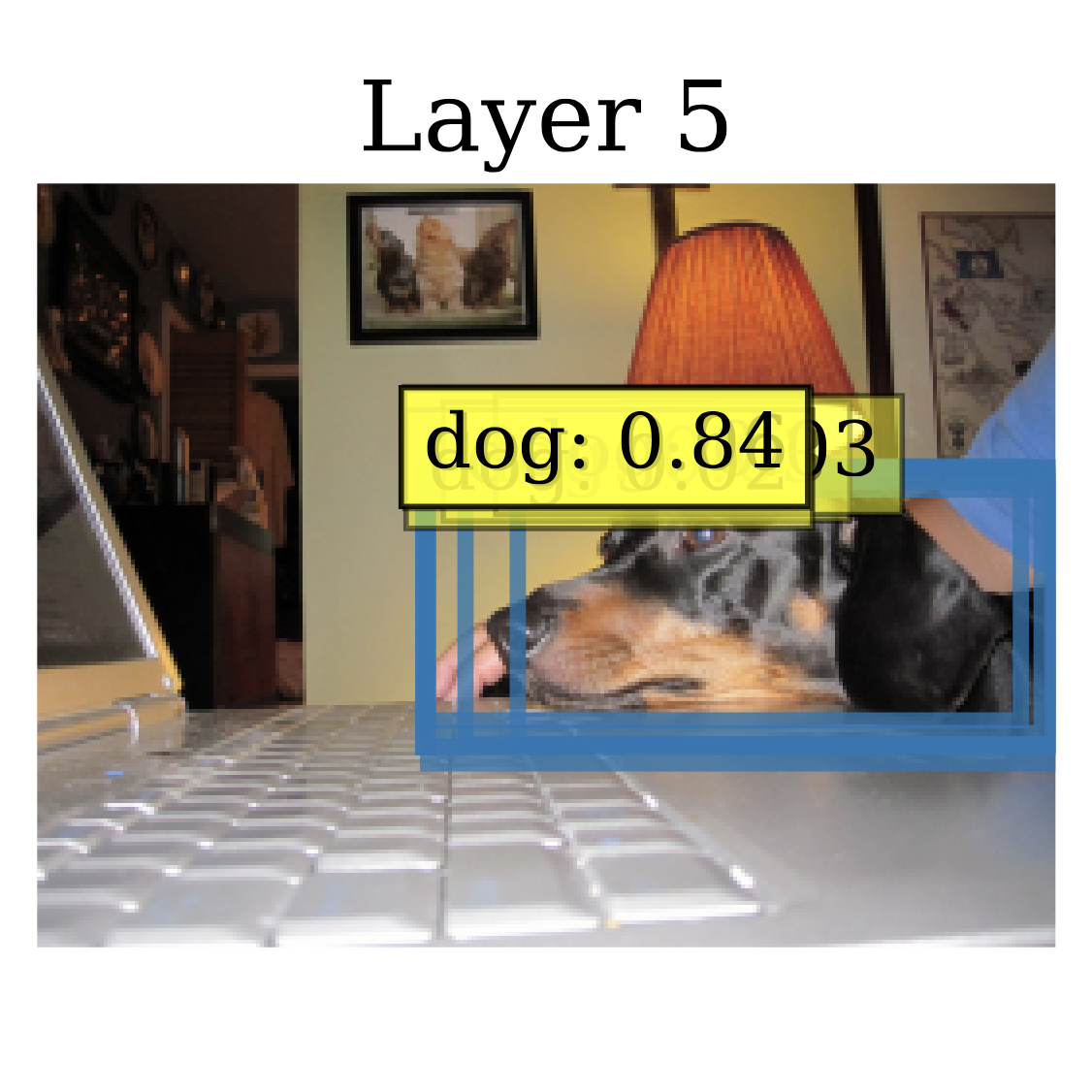

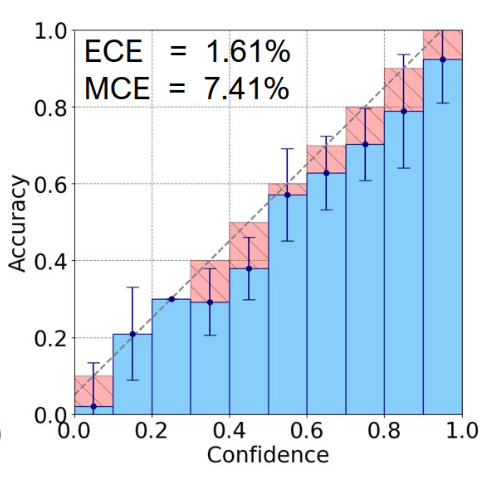

DEtection TRansformer (DETR) and its variants have emerged as promising architectures for object detection, offering an end-to-end prediction pipeline. In practice, however, DETRs generate hundreds of predictions that far outnumber the actual objects present in an image. This raises a critical question: which of these predictions can be trusted? This question is particularly important for safety-critical applications such as autonomous vehicles. Addressing this concern, we provide empirical and theoretical evidence that predictions within the same image play distinct roles, resulting in varying reliability levels. Our analysis reveals that DETRs employ an optimal “specialist strategy”: one prediction per object is trained to be well-calibrated, while the remaining predictions are trained to suppress their foreground confidence to near zero, even when they localize the object accurately. We show that this strategy emerges as the loss-minimizing solution under Hungarian matching, fundamentally shaping DETRs’ outputs. While selecting the well-calibrated predictions is ideal, they cannot be identified at inference time. As a result, any post-processing algorithm used to identify trustworthy predictions risks outputting a set of predictions with mixed calibration levels. Practical deployment therefore requires a joint evaluation of both the model’s calibration quality and the effectiveness of the post-processing algorithm. However, we demonstrate that existing metrics such as average precision and expected calibration error are inadequate for this task. To address this issue, we further introduce Object-level Calibration Error (OCE), which evaluates calibration by aggregating predictions per ground-truth object rather than per prediction. This object-centric design penalizes both retaining suppressed predictions and missing ground-truth foreground objects, making OCE suitable both for evaluating models and for identifying reliable prediction subsets. Finally, we present a post hoc uncertainty quantification (UQ) framework that predicts per-image model accuracy.
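The following hypothetical object-centric error makes "aggregating per ground-truth object" concrete. It is written for illustration only and is not the OCE definition from the paper. It assumes axis-aligned boxes `(x1, y1, x2, y2)`, an IoU threshold of 0.5, and a target confidence of 1.0 for every object that is present. The function names are invented for this sketch.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def object_level_error(gt_boxes, predictions, iou_thresh=0.5):
    """Hypothetical object-centric calibration error (NOT the paper's OCE).

    predictions: list of (box, confidence) pairs kept by post-processing.
    For each ground-truth object, average the confidences of the kept
    predictions that overlap it and compare the average with 1.0. A missed
    object contributes error 1.0, and a retained low-confidence (suppressed)
    duplicate drags the average down, so both cases are penalized.
    """
    if not gt_boxes:
        return 0.0
    total = 0.0
    for gt in gt_boxes:
        confs = [c for box, c in predictions if iou(box, gt) >= iou_thresh]
        mean_conf = sum(confs) / len(confs) if confs else 0.0
        total += abs(1.0 - mean_conf)
    return total / len(gt_boxes)
```

In this toy version, keeping a near-zero-confidence duplicate of a detected object raises the score, and an undetected object costs the maximum error of 1.0. These two effects mirror the penalties the abstract describes. Unlike OCE as described, the sketch ignores false positives that overlap no ground-truth object.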

-

Generalizable Image Repair for Robust Visual Control
Carson Sobolewski, Zhenjiang Mao, Kshitij Vejre, and Ivan Ruchkin
IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025

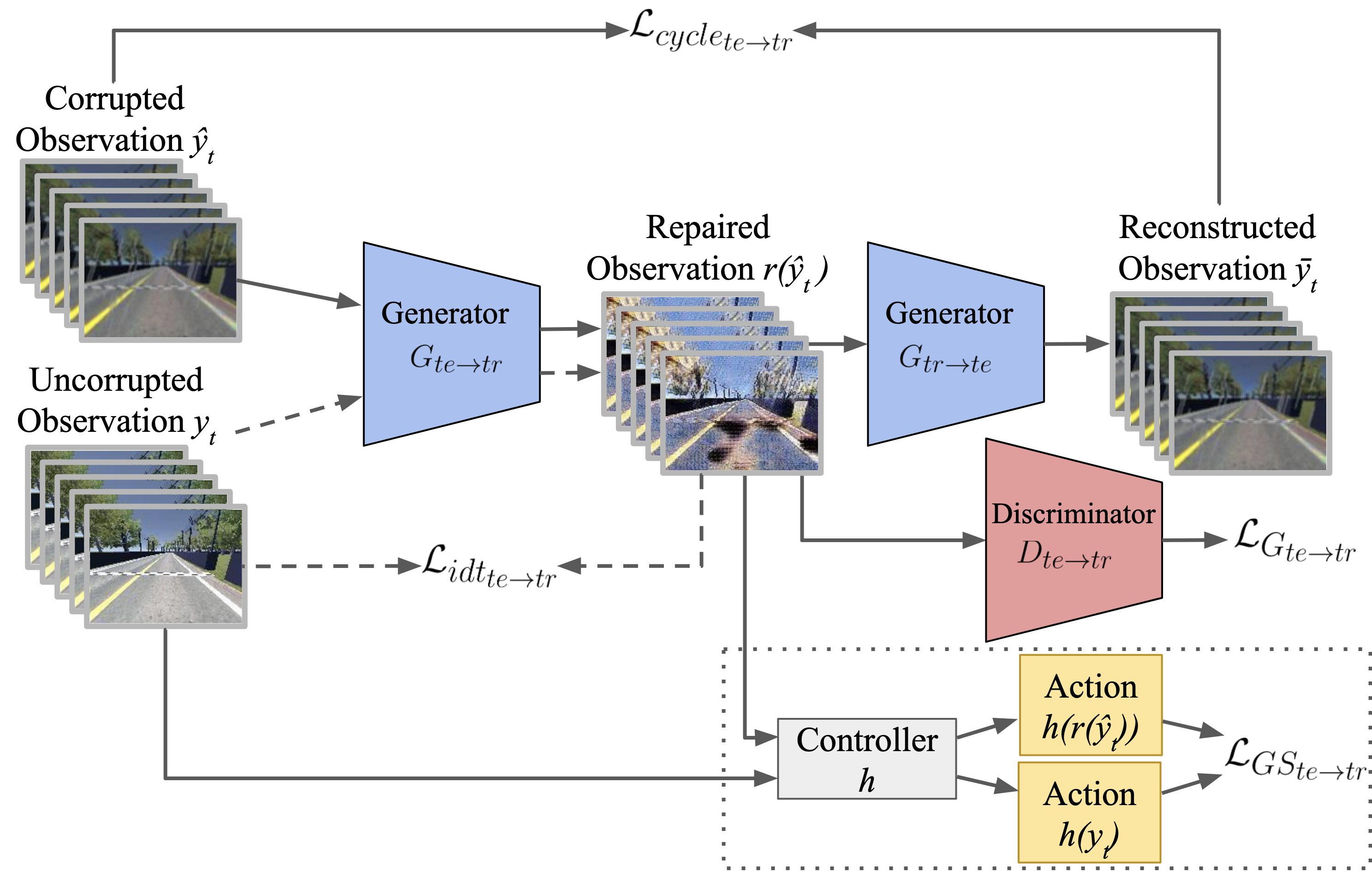

Vision-based control relies on accurate perception to achieve robustness. However, shifts in the image distribution caused by sensor noise, adverse weather, and dynamic lighting can degrade perception, leading to suboptimal control decisions. Existing approaches, including domain adaptation and adversarial training, improve robustness but struggle to generalize to unseen corruptions and introduce computational overhead. To address this challenge, we propose a real-time image repair module that restores corrupted images before the controller uses them. Our method leverages generative adversarial models, specifically CycleGAN and pix2pix, for image repair. CycleGAN enables unpaired image-to-image translation to adapt to novel corruptions, while pix2pix exploits paired image data, when available, to improve repair quality. To align image repair with control performance, we introduce a control-focused loss function that prioritizes perceptual consistency in repaired images. We evaluate our method in a simulated autonomous racing environment with various visual corruptions. The results show that our approach significantly improves performance compared to baselines, mitigating distribution shift and enhancing controller reliability.
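The sketch below shows one plausible form for a control-aware training objective. It combines a pixel reconstruction term with a penalty on how much the controller's action changes between the clean and repaired images. This form is an assumption for illustration and is not the loss used in the paper. Images are represented as flattened lists of floats. The names `controller`, `weight`, `l1`, and `control_focused_loss` are hypothetical.

```python
def l1(a, b):
    """Mean absolute pixel difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def control_focused_loss(repaired, clean, controller, weight=1.0):
    """Hypothetical loss: reconstruction error plus the squared distance
    between the controller's actions on the repaired and clean images.

    `controller` maps a flattened image to a tuple of actions, for example
    (steering, throttle).
    """
    recon = l1(repaired, clean)
    action_gap = sum(
        (u - v) ** 2 for u, v in zip(controller(repaired), controller(clean))
    )
    return recon + weight * action_gap
```

Raising `weight` shifts the emphasis from pixel fidelity toward preserving the controller's decisions. Pixel errors that do not change the action then cost comparatively little.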

-

A Framework for PCB Design File Reconstruction from X-ray CT Annotations
Carson Sobolewski, David Koblah, and Domenic Forte
International Symposium on Quality Electronic Design (ISQED), 2025

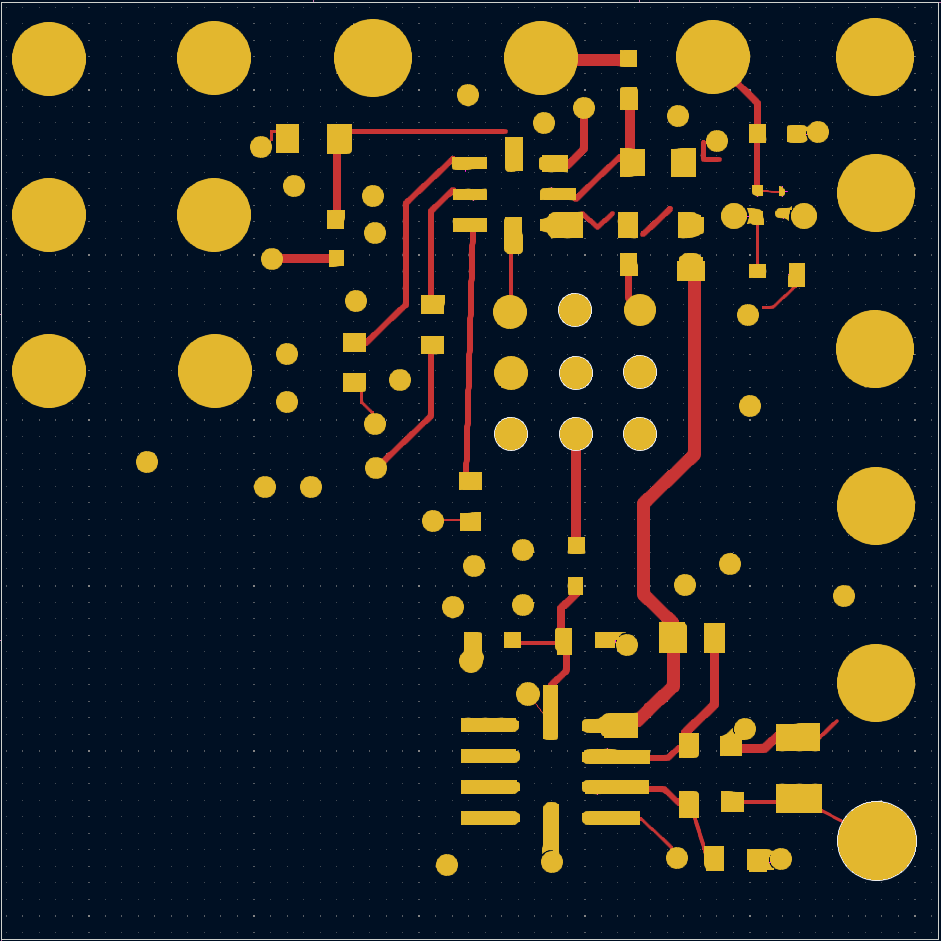

Reverse engineering (RE) is often used in security-critical applications to determine the structure and functionality of various systems, including printed circuit boards (PCBs). Although RE has both beneficial and malicious uses, it is particularly vital within the realm of hardware trust and assurance. PCB RE supports legacy electronic system replacement, intellectual property (IP) protection, and supply chain integrity. To meet the requirements of effective PCB RE, extensive research has been conducted on analyzing PCBs using X-ray computed tomography (CT) scans, including image segmentation focused on via and trace annotation. Building on these extracted annotations, this work outlines a Python-based framework, coupled with the open-source KiCad software, for the automated reconstruction of PCB design files. Given the via, pad, and trace annotations, along with the board dimensions, the algorithm automatically recognizes the board shape, trace sizes, and connections to accurately reconstruct the bare PCB. The technique was tested successfully on three distinct layers of a sample multilayer PCB. Its feasibility holds great promise for future extensions toward a complete PCB RE framework.
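The connection-recognition step can be pictured as grouping pads and vias into nets with a union-find structure. A trace endpoint that lands on a pad joins that pad's net, and traces that share an endpoint join each other. This sketch is hypothetical. The annotation format (pad centers and trace segments in millimeters), the 0.5 mm tolerance, and `build_nets` are all assumptions, and the code does not call KiCad.

```python
import math
from collections import defaultdict

def build_nets(pads, traces, tol=0.5):
    """Group pad/via names into nets (hypothetical annotation format).

    pads: dict mapping a name to its (x, y) center in mm.
    traces: list of ((x1, y1), (x2, y2)) segments in mm.
    Returns a sorted list of nets, each a sorted list of pad names.
    """
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        parent[find(a)] = find(b)

    for name in pads:
        find(("pad", name))  # register every pad, even unconnected ones
    for i, seg in enumerate(traces):
        find(("trace", i))
        for end in seg:
            # Connect this trace to any pad its endpoint lands on.
            for name, center in pads.items():
                if math.dist(end, center) <= tol:
                    union(("trace", i), ("pad", name))
            # Connect this trace to earlier traces sharing an endpoint.
            for j in range(i):
                if any(math.dist(end, other) <= tol for other in traces[j]):
                    union(("trace", i), ("trace", j))

    nets = defaultdict(set)
    for name in pads:
        nets[find(("pad", name))].add(name)
    return sorted(sorted(net) for net in nets.values())
```

The pairwise endpoint checks are quadratic in the number of traces. That cost is acceptable for a sketch, but a full board would call for a spatial index.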

2024

-

How Safe Am I Given What I See? Calibrated Prediction of Safety Chances for Image-Controlled Autonomy
Zhenjiang Mao, Carson Sobolewski, and Ivan Ruchkin
Learning for Dynamics & Control (L4DC) Conference, 2024

End-to-end learning has emerged as a major paradigm for developing autonomous systems. Unfortunately, its performance and convenience come with an even greater challenge of safety assurance. A key factor in this challenge is the absence of a low-dimensional and interpretable dynamical state, around which traditional assurance methods revolve. Focusing on the online safety prediction problem, this paper proposes a configurable family of learning pipelines based on generative world models, which do not require low-dimensional states. To implement these pipelines, we overcome the challenges of learning safety-informed latent representations and of missing safety labels under prediction-induced distribution shift. These pipelines come with statistical calibration guarantees on their safety chance predictions, based on conformal prediction. We perform an extensive evaluation of the proposed learning pipelines on two case studies of image-controlled systems: a racing car and a cartpole.
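The calibration guarantees rest on conformal prediction. The generic split conformal recipe below is not the paper's pipeline. It computes the absolute errors of a safety-chance predictor on a held-out calibration set and returns the ceil((n + 1)(1 - alpha))-th smallest error as the half-width of the prediction interval. Under exchangeability, the true value then falls inside the interval with probability at least 1 - alpha. The absolute-error score and the function names are assumptions.

```python
import math

def conformal_threshold(cal_pred, cal_true, alpha=0.1):
    """Split conformal prediction with absolute-error scores.

    Returns q such that, for a new exchangeable example,
    |true - pred| <= q holds with probability at least 1 - alpha.
    """
    scores = sorted(abs(p - y) for p, y in zip(cal_pred, cal_true))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the conformal quantile
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return scores[k - 1]

def safety_interval(pred, q):
    """Calibrated interval for a predicted safety chance, clipped to [0, 1]."""
    return max(0.0, pred - q), min(1.0, pred + q)
```

With only nine calibration points, any alpha below 0.1 returns an infinite threshold. A larger calibration set is therefore what makes tight, high-confidence intervals possible.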